The following walks through the workflow on RZ/V2H as an example; the same steps apply to RZ/V2N.

If you would like to view tutorials for previous versions, please see here.

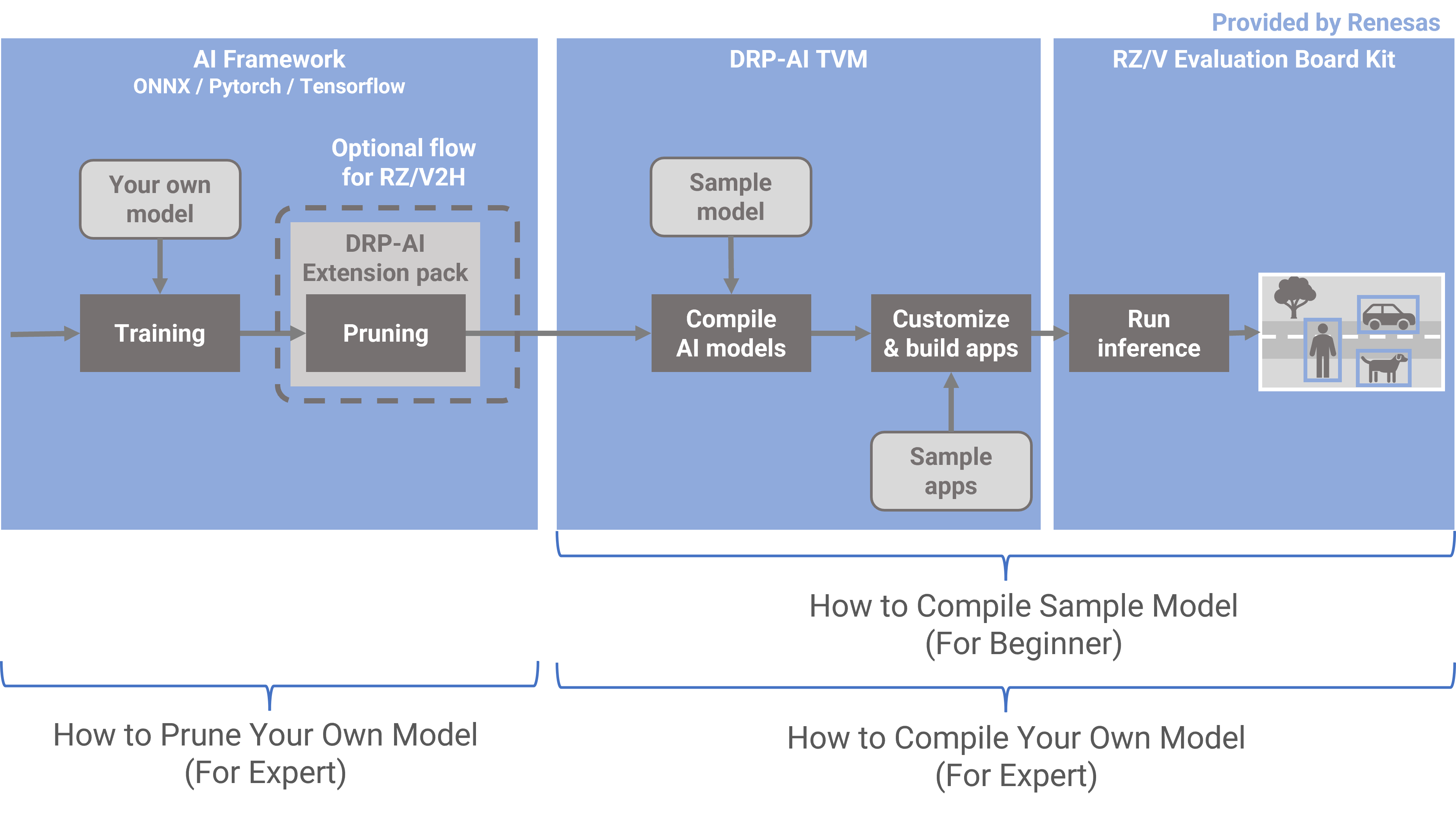

There are 3 tutorials about AI deployment tools for RZ/V2H.

- To get a quick understanding of DRP-AI TVM using a sample model, follow the instructions on this page.

- For understanding how to prune your own model, please click here.

- For understanding how to compile your own model, please click here.

Video

The tools used in this video are not the latest versions, but the content is still useful as a reference when using the latest tools.

Getting Started (RZ/V2H)

Requirements

Requirements are listed below.

| Item | Details |
|---|---|
| OS | Ubuntu 22.04 |
| Python | 3.10 |
| Package | git |
| Container Runtime | Docker |
| Evaluation Board | RZ/V2H EVK |
| Related Software Version | ● DRP-AI TVM v2.7.0 ● DRP-AI Translator i8 v1.11: DRP-AI_Translator_i8-v1.11-Linux-x86_64-Install or later ● RZ/V2H AI SDK v6.00: RTK0EF0180F06000SJ.zip or later ● DRP-AI Extension Pack (Pruning Tool) v1.30 or later |
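The host-side tools in the requirements can be checked quickly before starting. The following is a minimal sketch, not part of DRP-AI TVM: `check_tool` is a hypothetical helper, and the tool names (`python3`, `git`, `docker`) are assumed to be the command names corresponding to the entries listed in the requirements.

```shell
#!/bin/sh
# check_tool NAME: report whether the command NAME is available on PATH.
# Hypothetical helper for illustration only.
check_tool() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "ok:      $1"
        return 0
    fi
    echo "missing: $1"
    return 1
}

# Count how many of the assumed command names are not installed.
missing=0
for tool in python3 git docker; do
    check_tool "$tool" || missing=$((missing + 1))
done
echo "tools missing: $missing"

# Print the OS description so it can be compared with Ubuntu 22.04.
if [ -r /etc/os-release ]; then
    grep '^PRETTY_NAME=' /etc/os-release || true
fi
```

The script only reports; it does not install anything or check version numbers, so the Python version still needs to be confirmed separately (for example with `python3 --version`).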

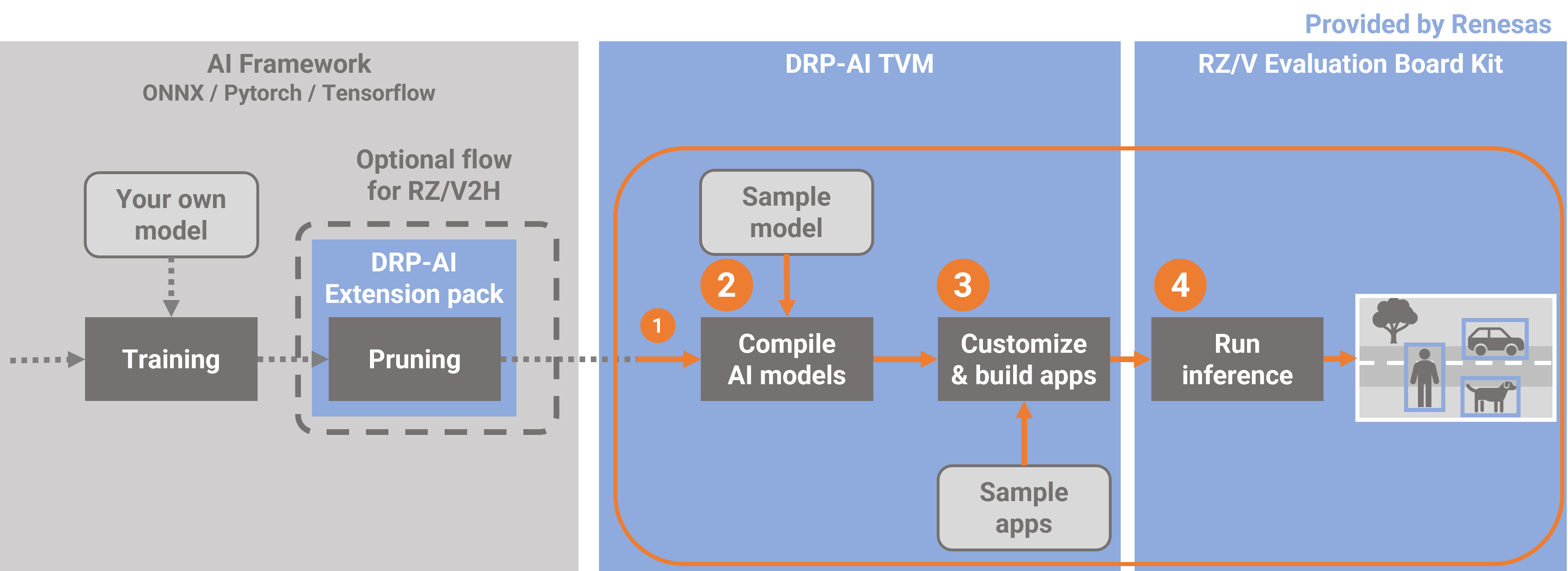

Workflow

From this point on, we will follow these steps.

1. Setup Environment

Preparing a workspace

Prepare a workspace for the following steps.

$ mkdir tvm_work

$ cd tvm_work

1-1. Download the required installers

Download DRP-AI Translator i8 v1.11 from the link below.

https://www.renesas.com/software-tool/drp-ai-translator-i8

$ cp $HOME/Download/r20ut5460ej0101-drp-ai-translator-i8.zip ./

$ unzip r20ut5460ej0101-drp-ai-translator-i8.zip DRP*

Download RZ/V2H AI SDK v6.00 (poky) from the link below.

https://www.renesas.com/us/en/software-tool/rzv2h-ai-software-development-kit

$ cp $HOME/Download/RTK0EF0180F06000SJ.zip ./

$ unzip RTK0EF0180F06000SJ.zip */poky*sh

$ mv ai_sdk_setup/* .

1-2. Download a dockerfile

Download the Dockerfile from the repository.

$ wget https://raw.githubusercontent.com/renesas-rz/rzv_drp-ai_tvm/main/DockerfileV2H

1-3. Build the docker image
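Before building, it may help to confirm that the workspace holds the files fetched in steps 1-1 and 1-2. The following is a minimal sketch: `has_match` is a hypothetical helper, and the glob patterns are derived from the file names that appear on this page (the Translator installer name in the requirements, `poky*sh`, and `Dockerfile*`), so adjust them if your downloads are named differently.

```shell
#!/bin/sh
# has_match DIR PATTERN: succeed if at least one entry in DIR matches PATTERN.
# Hypothetical helper for illustration only.
has_match() {
    # $2 is intentionally unquoted so the shell expands it as a glob.
    for entry in "$1"/$2; do
        [ -e "$entry" ] && return 0
    done
    return 1
}

# Check the current directory (expected to be the tvm_work workspace).
workspace="."
for pattern in 'DRP-AI_Translator_i8-*-Install' 'poky*sh' 'Dockerfile*'; do
    if has_match "$workspace" "$pattern"; then
        echo "found:   $pattern"
    else
        echo "missing: $pattern"
    fi
done
```

Run it from inside `tvm_work`; any line starting with `missing:` points at a download or unzip step that should be repeated.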

Build the Docker image from the Dockerfile downloaded in the previous step.

$ sudo docker build -t drp-ai_tvm_${PRODUCT,,}_image_${USER} -f Dockerfile* --build-arg PRODUCT=${PRODUCT} .

1-4. Create and run a new container from the docker image
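The build and run commands on this page name the image and container with `${PRODUCT,,}`, a Bash 4+ expansion that lowercases the value of `PRODUCT`. This page does not show where `PRODUCT` is set, so the sketch below assumes the value `V2H` (inferred from the Dockerfile name and the `-DV2H=ON` build option) and prints the names the commands would then use. It uses `tr` as a portable stand-in for `${PRODUCT,,}`.

```shell
#!/bin/sh
# Assumption: PRODUCT is "V2H" for the RZ/V2H EVK (not stated on this page).
PRODUCT="V2H"

# Fall back to `id -un` if USER is not set in the environment.
user_name="${USER:-$(id -un)}"

# Portable equivalent of Bash's ${PRODUCT,,} lowercase expansion.
product_lower=$(printf '%s' "$PRODUCT" | tr '[:upper:]' '[:lower:]')

image_name="drp-ai_tvm_${product_lower}_image_${user_name}"
container_name="drp-ai_tvm_${product_lower}_container_${user_name}"

echo "image:     $image_name"
echo "container: $container_name"
```

If `PRODUCT` is empty when the commands run, the names would contain a double underscore (`drp-ai_tvm__image_...`), which is an easy sign that the variable was not exported in the current shell.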

Create a container from the docker image built in the previous step.

You can enter the created container by executing the following command.

$ sudo docker run -it --name drp-ai_tvm_${PRODUCT,,}_container_${USER} drp-ai_tvm_${PRODUCT,,}_image_${USER}

2. Compile AI models

All subsequent operations are performed inside the container you created in Chapter 1.
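The commands in this chapter and the next refer to the variables `TVM_ROOT`, `SDK`, `TRANSLATOR`, and `QUANTIZER`. That the container image defines all of them is an assumption based on how they are used on this page. A minimal sketch for confirming it inside the container, with `is_set` as a hypothetical helper:

```shell
#!/bin/sh
# is_set NAME: succeed if the variable called NAME is set and non-empty.
# The value is left in $value. Hypothetical helper for illustration only.
is_set() {
    eval "value=\${$1:-}"
    [ -n "$value" ]
}

# Variable names taken from the commands used on this page.
for name in TVM_ROOT SDK TRANSLATOR QUANTIZER; do
    if is_set "$name"; then
        echo "$name=$value"
    else
        echo "$name is not set"
    fi
done
```

If any variable is reported as not set, the compile command in 2-2 would receive an empty argument, so it is worth resolving that before continuing.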

2-1. Download an ONNX model

In this case, resnet18 is used as an example.

First, download the ONNX model file from the following URL with the following command.

root@docker_hostname:# cd $TVM_ROOT/tutorials/

root@docker_hostname:# wget https://github.com/onnx/models/raw/main/validated/vision/classification/resnet/model/resnet18-v1-7.onnx

2-2. Compile an ONNX model

Compile the ONNX format model you have just downloaded with the sample script already prepared.

root@docker_hostname:# python3 compile_onnx_model_quant.py ./resnet18-v1-7.onnx -o resnet18_onnx -t $SDK -d $TRANSLATOR -c $QUANTIZER --images $TRANSLATOR/../GettingStarted/tutorials/calibrate_sample/

Confirming the output

root@docker_hostname:# ls resnet18_onnx

input_0.bin preprocess interpreter_out project.mdp mera.plan sub_0000__CPU_DRP_TVM model_subgraphs.json

3. Customize & build apps
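Before building the application, the compile output can be compared against the directory listing shown for `resnet18_onnx`. The following is a minimal sketch with a hypothetical helper, `list_missing`; the entry names are copied from that listing, and other models or tool versions may produce a different set.

```shell
#!/bin/sh
# list_missing DIR NAME...: print each NAME that does not exist under DIR.
# Hypothetical helper for illustration only.
list_missing() {
    dir=$1
    shift
    for name in "$@"; do
        [ -e "$dir/$name" ] || echo "$name"
    done
}

# Entry names copied from the listing of resnet18_onnx on this page.
list_missing resnet18_onnx \
    input_0.bin preprocess interpreter_out project.mdp \
    mera.plan sub_0000__CPU_DRP_TVM model_subgraphs.json
```

Run it from `$TVM_ROOT/tutorials/`. No output means every listed entry is present; each printed name is an entry that was not found.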

3-1. Preparing build environment

Prepare an environment for building.

$ cd $TVM_ROOT/apps

$ mkdir build

$ cd build

$ cmake -DCMAKE_TOOLCHAIN_FILE=./toolchain/runtime.cmake -DV2H=ON ..

3-2. Build a sample application

Next, build an application to run the compiled model.

$ make -j$(nproc)

Confirming the build artifact tutorial_app.

$ ls

CMakeCache.txt CMakeFiles Makefile cmake_install.cmake tutorial_app

3-3. Preparing the necessary files to run on the board

Prepare the necessary files to run on the board.

$ cd $TVM_ROOT/../

$ mkdir -p tvm/lib

$ cp $TVM_ROOT/obj/build_runtime/v2h/lib/* tvm/lib/.

$ cp $TVM_ROOT/apps/exe/sample.bmp tvm/

$ cp $TVM_ROOT/apps/exe/ImageNetLabels.txt tvm/

$ cp $TVM_ROOT/apps/exe/synset_words_imagenet.txt tvm/

$ cp $TVM_ROOT/apps/build/tutorial_app* tvm/

$ cp -r $TVM_ROOT/tutorials/resnet18_onnx tvm/

$ tar acvf tvm.tar.gz tvm/

Confirming the tvm.tar.gz

$ ls

tvm tvm.tar.gz

4. Run inference
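Before copying the archive to the board, its contents can be listed to confirm that the files staged in 3-3 were actually packed. The following is a minimal sketch: `archive_has` is a hypothetical helper, and the three paths checked are examples taken from the `cp` commands in 3-3, not a complete list.

```shell
#!/bin/sh
# archive_has ARCHIVE PATH: succeed if PATH is listed in the gzip-compressed
# tar file ARCHIVE. Hypothetical helper for illustration only.
archive_has() {
    tar tzf "$1" | grep -q -x -F "$2"
}

archive="tvm.tar.gz"
if [ -f "$archive" ]; then
    # Example paths taken from the cp commands in step 3-3.
    for path in tvm/sample.bmp tvm/tutorial_app tvm/synset_words_imagenet.txt; do
        if archive_has "$archive" "$path"; then
            echo "ok:      $path"
        else
            echo "missing: $path"
        fi
    done
else
    echo "$archive not found in $(pwd)"
fi
```

Checking on the host side is cheaper than discovering a missing library or label file after the archive has been extracted on the board.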

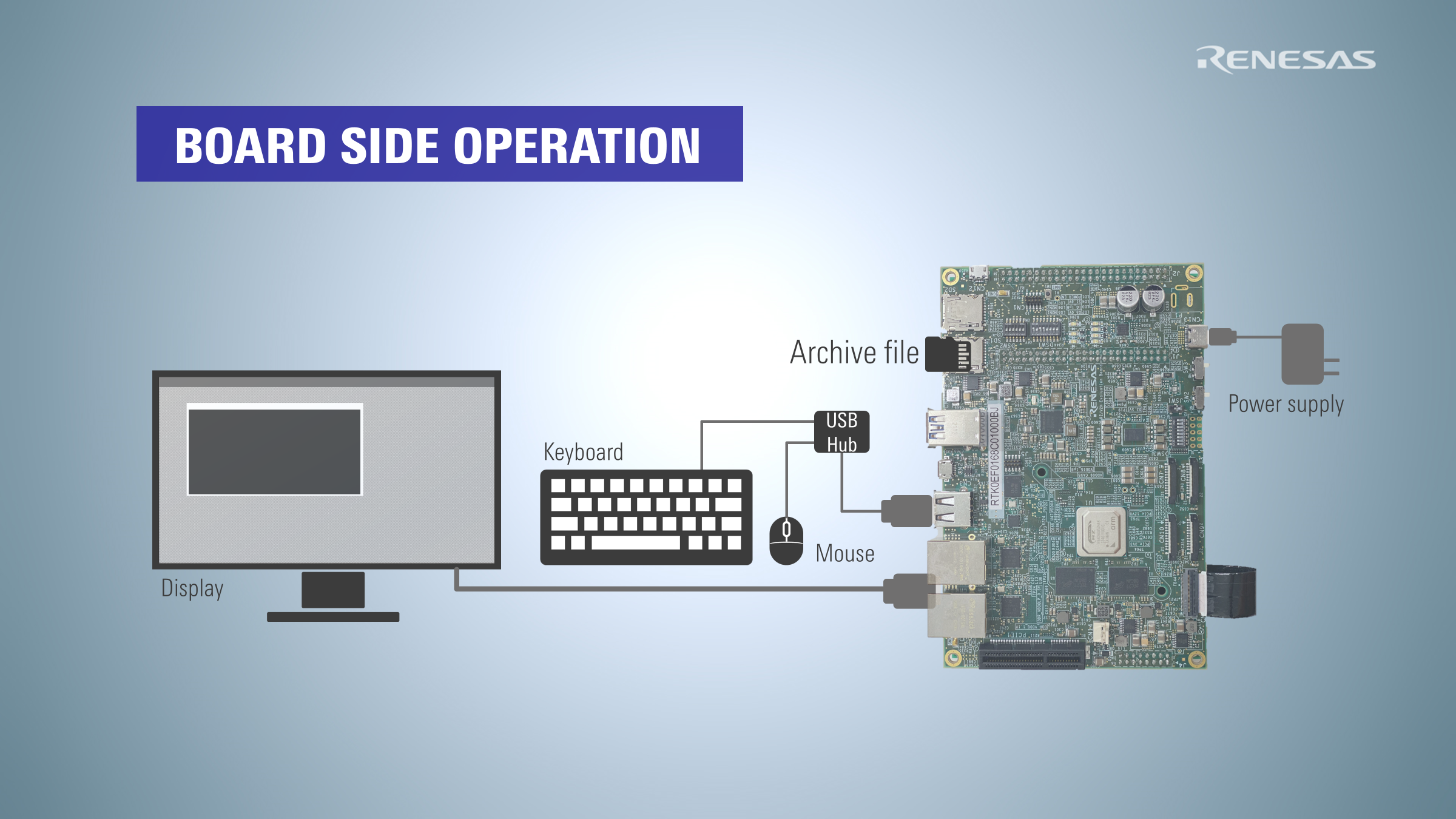

4-1. Setup the target board

Please refer to the following pages to set up your board.

First, follow the steps on this page.

Next, follow only step 11 (IP address setting) on this page.

4-2. Copy to the board

Copy the files you have just prepared to the board.

$ scp ./data/tvm.tar.gz root@192.168.1.11:/root

- Board-side operation -

From here on, all operations are performed on the board.

Either operate the board directly or connect to it from another machine.

4-3. Extract the files

Extract the archive copied to the board.

root@rzv2h-evk...:# cd /root/

root@rzv2h-evk...:# tar xvfz tvm.tar.gz

root@rzv2h-evk...:# cd ./tvm

4-4. Run the application

Execute the following command to start the application.

root@rzv2h-evk...:# export LD_LIBRARY_PATH=./lib/.

root@rzv2h-evk...:# ./tutorial_app

The application runs the ResNet inference on sample.bmp.

The following is the expected output for the ResNet18 ONNX model compiled for DRP-AI on the RZ/V2H Evaluation Board Kit.

[2025-05-29 20:26:47.352] [console] [info] MERA 2.0 Runtime

[TIME] PreRuntime DRP-AI processing time : 1.11 msec

[TIME] GetResult() Processing Time : 0.75 msec

[TIME] Pre Processing Time: 2.42 msec.

Running tvm runtime (freq_index=1, zero_copy=off)

[TIME] AI Processing Time: 2.62 msec.

Output data type : FP16.

Result ----------------------

Top 1 [ 69.2%] : [beagle]

Top 2 [ 11.9%] : [English foxhound]

Top 3 [ 4.2%] : [basset, basset hound]

Top 4 [ 0.6%] : [bluetick]

Top 5 [ 0.5%] : [bloodhound, sleuthhound]

This is the end of the workflow.
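When comparing several runs, the inference time can be pulled out of the application output with a small filter. The following is a minimal sketch that assumes the `[TIME] AI Processing Time: ... msec.` line format shown in the expected output above and that `sed` is available in the shell where it runs; `ai_time_msec` is a hypothetical helper, not part of the application.

```shell
#!/bin/sh
# ai_time_msec: read application output on stdin and print only the number
# from the "[TIME] AI Processing Time" line. Hypothetical helper.
ai_time_msec() {
    sed -n 's/^\[TIME\] AI Processing Time: *\([0-9.]*\) msec.*/\1/p'
}

# Example using lines copied from the expected output on this page.
printf '%s\n' \
    '[TIME] Pre Processing Time: 2.42 msec.' \
    'Running tvm runtime (freq_index=1, zero_copy=off)' \
    '[TIME] AI Processing Time: 2.62 msec.' | ai_time_msec
```

On the board this could be used as `./tutorial_app 2>&1 | ai_time_msec`; redirecting standard error is a precaution, since this page does not say which stream the log lines are written to.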

Appendix

Details of the files required to run on the board.

| Name | Path | Details |
|---|---|---|
| Runtime Library | drp-ai_tvm/obj/build_runtime/${PRODUCT}/lib/* | Binary provided under obj directory. You should use the libraries in the directory with the corresponding product name. |
| Model Data | drp-ai_tvm/tutorials/resnet* | Model compiled in the Compile AI models. DRP-AI Preprocessing Runtime Object files (preprocess directory) are also included. |
| Input Data | drp-ai_tvm/apps/exe/sample.bmp | Windows Bitmap file, which is input data for image classification. |
| Label List | drp-ai_tvm/apps/exe/synset_words_imagenet.txt, drp-ai_tvm/apps/exe/ImageNetLabels.txt | synset_words_imagenet.txt: Label list for ResNet18 post-processing. ImageNetLabels.txt: Label list for ResNet50 post-processing when compiling Tensorflow Hub model. |
| Application | drp-ai_tvm/apps/build/tutorial_app | Compiled in this page. |